2026-02-16

30 Scientific Findings That Show How AI Is Transforming Employee Productivity

2026-02-13

Scaling Without Chaos: The Operational Systems Growing Businesses Put in Place Early

2026-02-12

Redefining Employee Experience Through Best-of-Breed Benefits Platforms

2026-02-12

How Modern HR Departments Can Leverage Digital PR for Employer Branding

2026-02-11

A 2026 HR Guide to Managing Third-Party Contractor Safety on Construction Sites

2026-02-05

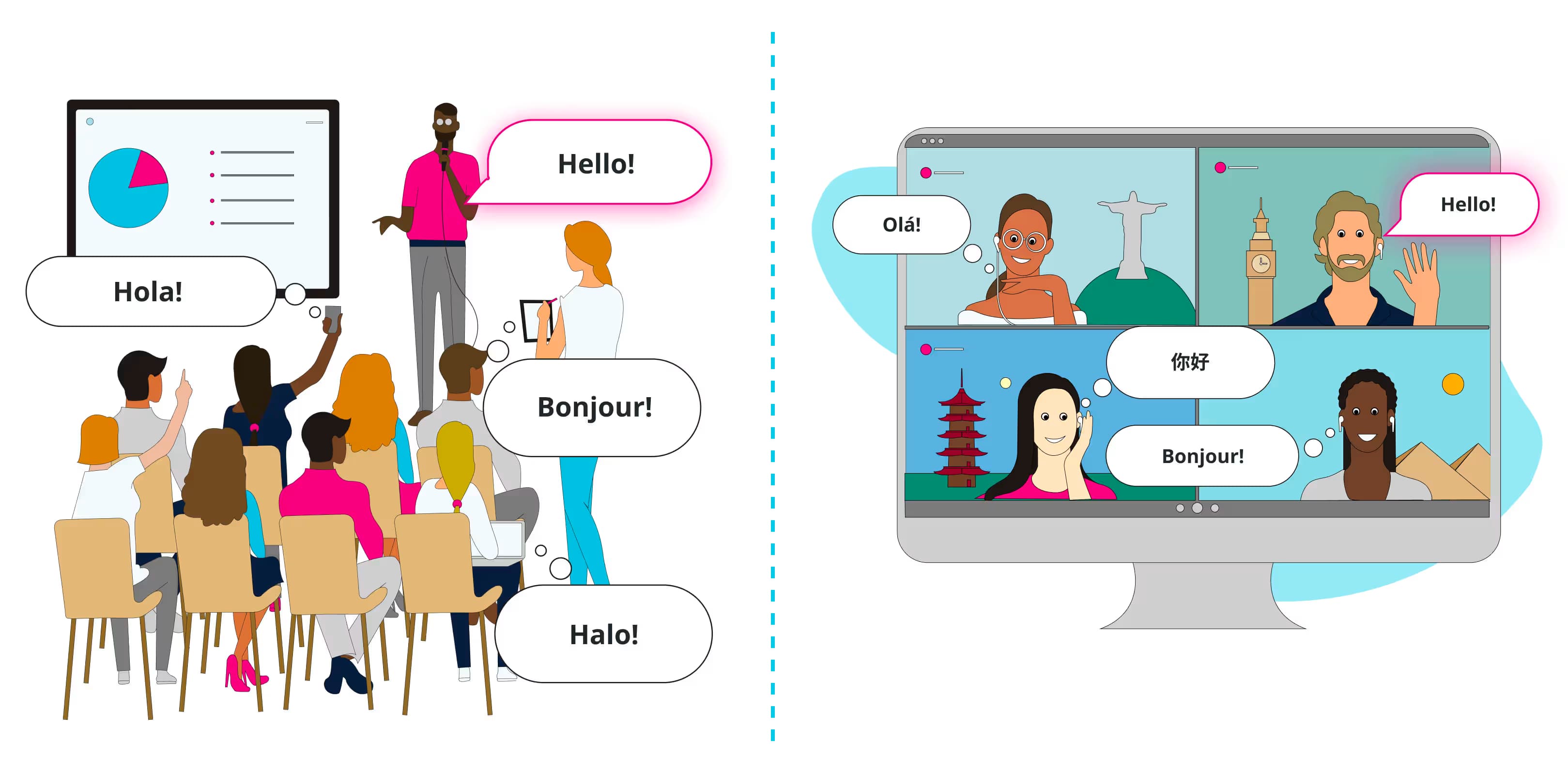

AI Live Translation for HR Onboarding: A Step-by-Step Guide

2026-02-04

Sling Software Review: Comparison, Pricing, and Industry Use Cases

2026-02-04

ClockShark Review: Features, Pricing, Pros, Cons, and Best Use Cases

2026-02-04

Buddy Punch Review: Features, Pros, Cons, and Value

2026-02-04

Agendrix Review: Features, Pricing, Pros, Cons, and Best Use Cases

2026-02-04

Workforce.com Review: The Enterprise-Grade Workforce Platform for Complex Hourly Operations

2026-02-04